Know if your AI chatbot works — in minutes.

Upload your conversations. Get a score, plain-English feedback, and a shareable report. No technical setup, no code.

Upload a CSV, Excel, or JSONL file with these three columns:

| question | reference | answer |
|---|---|---|
| What is 2+2? | Four | 2+2 equals 4 |
| Capital of France? | Paris | The capital city of France is Paris |
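If your conversations already live in a script or database export, a file in this shape can also be generated rather than typed by hand. The snippet below is a minimal sketch, not part of the product: the `build_csv` helper and `REQUIRED_COLUMNS` name are hypothetical, and it assumes `reference` holds the expected answer while `answer` holds what your chatbot actually said, as the sample rows suggest.

```python
import csv
import io

# Column names taken from the sample table above.
REQUIRED_COLUMNS = ["question", "reference", "answer"]


def build_csv(rows):
    """Return CSV text with the three expected columns (hypothetical helper)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=REQUIRED_COLUMNS)
    writer.writeheader()
    for row in rows:
        # Reject rows where any of the three columns is missing or blank.
        missing = [c for c in REQUIRED_COLUMNS if not row.get(c, "").strip()]
        if missing:
            raise ValueError(f"Row is missing values for: {missing}")
        writer.writerow({c: row[c] for c in REQUIRED_COLUMNS})
    return buffer.getvalue()


rows = [
    {"question": "What is 2+2?", "reference": "Four", "answer": "2+2 equals 4"},
    {
        "question": "Capital of France?",
        "reference": "Paris",
        "answer": "The capital city of France is Paris",
    },
]

csv_text = build_csv(rows)
print(csv_text)
```

Save the resulting text as a `.csv` file and upload it as usual.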

Create effective evaluation data in 3 simple steps

1. Add 10-20 questions that represent your chatbot's main purpose. These should be typical user questions.

2. Include questions from different areas: basic info, complex scenarios, edge cases your users might ask.

3. Test inappropriate requests, off-topic questions, or queries that should be redirected to human support.

Tip: Mix easy and challenging questions to get a complete picture of your chatbot's performance.

Getting clear answers about your chatbot's performance shouldn't be this hard

Reading through hundreds of conversations manually is time-consuming, and you still miss the patterns that matter.

Complex dashboards and metrics take a data science degree to understand. You need clarity, not confusion.

Your boss wants to know: "Is it working?" Your report needs to be simple, clear, and shareable.

Just drag and drop your file with questions and answers. No complex setup required.

We use AI itself to review your chatbot's answers. Think of it like a smart critic that spots what people would notice.

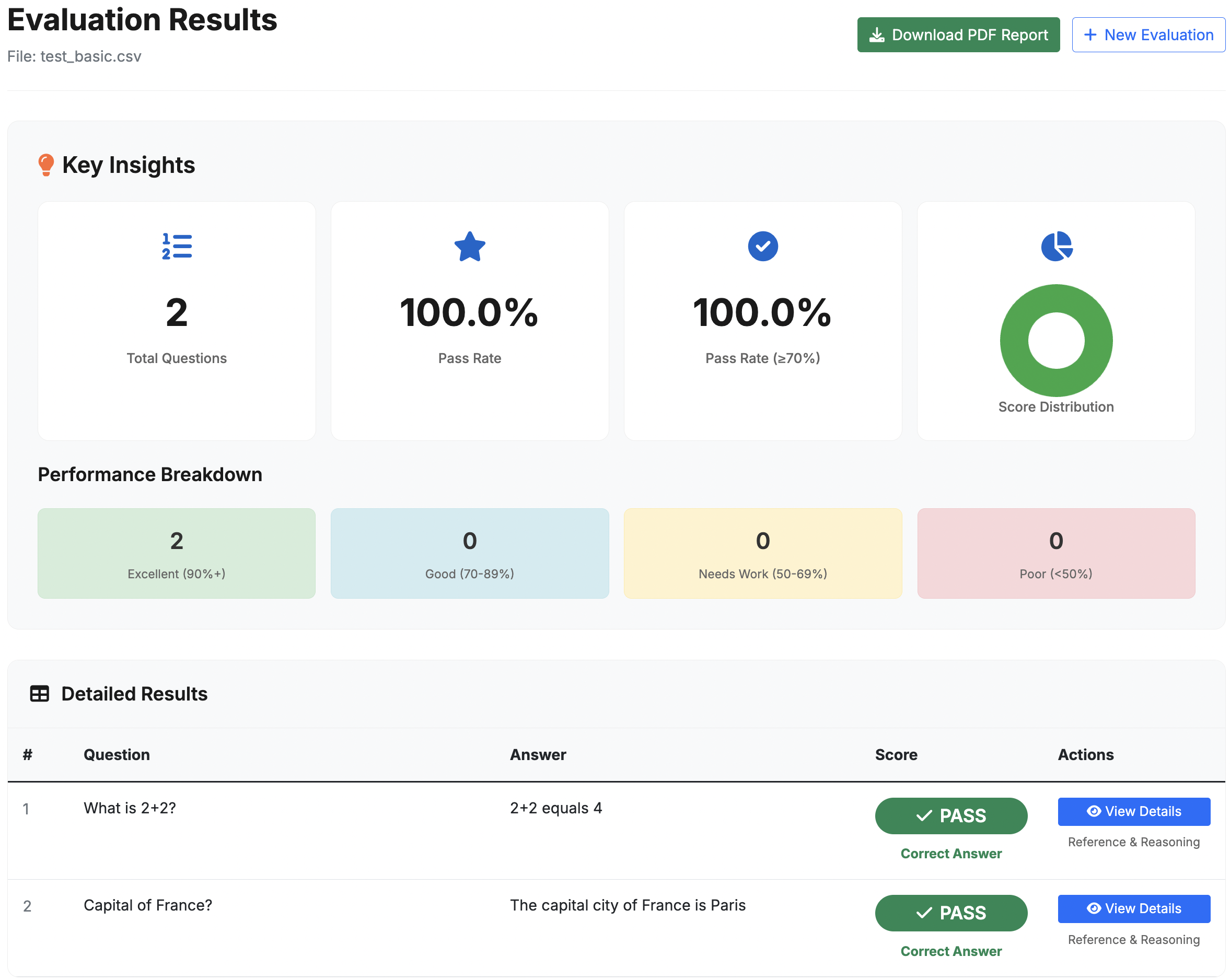

Real evaluation results showing specific suggested fixes for each answer — ready-to-paste improvements with policy pointers

Know if your bot is meeting the mark

Bring a clear report to your next review

Show investors or clients measurable progress